Faster Horses, Better Software

Software today is far more broken than most people realize. It didn't have to be this way, and it still doesn't. It's time to radically rethink software.

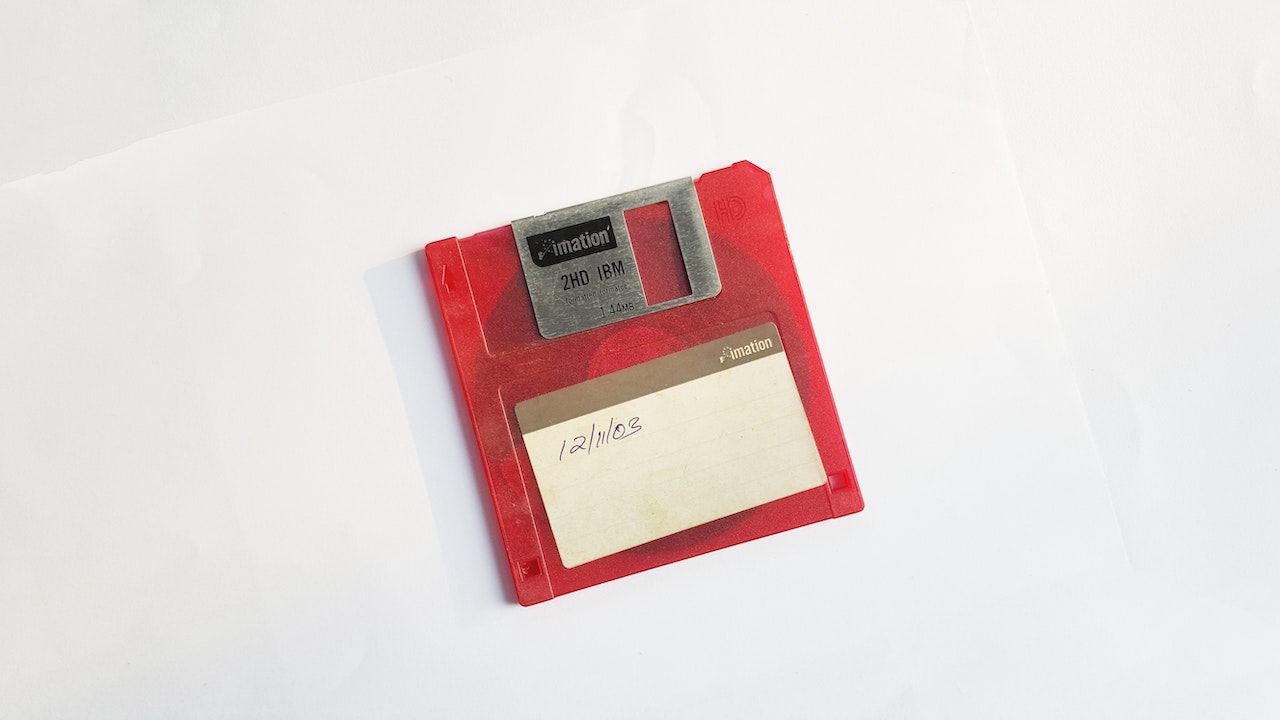

It's hard to believe today, but software used to come on floppy disks like this one. As basic as that software was, I'm not entirely convinced software today is better. Photo by Fredy Jacob on Unsplash

One of my favorite quotes about innovation is oft-repeated and usually misattributed to Henry Ford, but it is insightful nevertheless: “If I had asked people what they wanted, they would have said faster horses.”

Software today is like horses were in Ford’s day. It’s a technology that’s ubiquitous, cheap, and one that works pretty well and solves real problems. Like horses, software mostly gets you where you need to go with a reasonable degree of speed and comfort. If you ask most people today what they want from software and digital technology, they’d probably say something like faster apps, faster downloads, or larger, cheaper data plans. In other words, faster horses.

Of course, what the world needed in Ford’s day was not faster horses, more horses, nor cheaper horses, but rather a paradigm shift in transportation.

By the same token, today, the world needs a paradigm shift in software and computing. My answer to Peter Thiel’s Zero to One question is: Software today is completely broken. It’s taken us about as far as it can, and if we want to continue to advance the state of the art of computing, we must totally reimagine the computing and software stack: we need a Model T to software’s tired horses. Of course, most people don’t realize this—most people are probably pretty happy with their software, and would probably remark if asked that software has gotten better and better. This is only partially true, as I’ll explain below.

How software is broken

Software is broken in a huge variety of ways: some technical, some social, some economic. The first and most obvious example is the phenomenon of surveillance capitalism. This is one of the only aspects of the brokenness of software that people do talk about. However, only a minority complain about it, and an even tinier minority do anything about it. Others have told this story better than I can here, but in brief, before the era of broadband and software as a service, we used to actually buy software, which came in shrink-wrapped boxes. It may have been expensive and the update cycle may have been slow by modern standards, but at least it didn’t spy on us. We used to run it on machines that we owned and controlled, and we used to have control of the software and of the data, too. That all changed with the advent of the Internet and the Web. We subsequently made a Faustian bargain to sell our data, our behavior, and our digital selves in exchange for ostensibly “free” software as a service.

What software used to look like. (Source)

We can all agree that we no longer have control of the software we run in any meaningful sense—and if you don’t understand how a technology or a product works, then in effect it’s running you, not the other way around. Our software serves the companies that build and operate it, not us. This is in fact a perversion of the original idea of software. The original concept of software was that of a digital agent designed to help the user. Software has morphed from a Jobsian bicycle for our minds into a hamster wheel, a prison, a panopticon—pick your favorite dystopian metaphor—where we’re all performing work for the algorithms and for our digital overlords without even realizing it (and, obviously, not getting paid for that work). It wasn’t supposed to be this way.

We no longer have control over the software we run. We have no control over our data or over our digital identities, and we can only access our data when the digital overlords decide to allow us to, when it’s in their interest to let us.

As bad as that already sounds, the situation is in fact far worse. If, in spite of these failings, our software worked well, if it were well designed, innovative, and reliable, then at the very least we would know that we had gained something from the Faustian trade. In fact, none of these is true: the sad truth is that we not only sold our digital souls, we did so for a trifle.

With very few exceptions, the software we run today is exceedingly fragile.1 More user-facing software is written using JavaScript than any other language, and JavaScript is a language that doesn’t even handle equality well (its loose == operator considers 0 and an empty string equal), much less enable the finesse that writing software used to require, such as fine-grained memory management. Don’t get me wrong, I’m all for democratizing software development. Anyone should be able to hack something together easily at a hackathon, and that’s much easier to do in JavaScript than in C. But there’s software, and then there’s software: an app that’s run by millions or billions of users should be expected to work, and to just keep working. Rather than trying to figure out how to write better software, we instead assume that all software is malicious and try to sandbox it better so it doesn’t destroy our computer. To put this in perspective, it would be like focusing exclusively on better quarantine measures rather than trying to develop a vaccine for the novel coronavirus.

There are data breaches, outages, and hacks. Going slightly deeper, there’s the fragility of the software stack and bugs that lie buried deep in code that everyone relies on but no one (other than hackers) has looked at for decades. There’s cancel culture, deplatforming, shadow bans, and various other forms of censorship. Big, unaccountable tech firms can delete your digital property, remove your access to your files, cut off your voice and your income, and effectively erase your digital identity, all without due process, without notifying you, and without even the possibility of appeal. (Of course, if your files are stored on their servers, they’re not really your files in the first place.)

As if that weren’t all bad enough, modern software just doesn’t actually work that well. It’s not that user friendly. New features aren’t added very often, for fear of upsetting existing users. It’s not that innovative anymore: it’s like all the innovation happened in the nineties and aughts, and hasn’t moved much since then. It’s a case of the emperor’s new clothes, where everyone acts enraptured by the latest photo- or video-sharing app, because everyone else seems to be, and no one notices how bad things are or considers how much better they could be.

Here are some more examples: Apps that are incredibly slow. Pages that rerender and buttons that move before you can click on them. Fragile maps that lose state when you touch them. The need to constantly set passwords using arcane, arbitrary rules, remember passwords, reset passwords, change passwords, re-authenticate yourself, get codes by SMS (itself vulnerable to SIM swapping) and click verification links in emails. Settings, preferences, active logins, and application state not synchronizing cleanly across devices, or not appearing on new devices. Sitting and watching a spinning wait icon as an app loads data slowly, or fails entirely to load data. Deep links that don’t work and apps that don’t let you hit “back” to go where you want to go. “Share” menus that look different and icons that move around from app to app. Apps that don’t work offline. Apps that don’t save state when you briefly switch away from them. Apps that work on one platform but not another. Apps that are poorly designed, with frustrating, clumsy interfaces that you cannot change. Apps that work for months, then one day just stop working. Inaccurate GPS. Data that isn’t portable. Apps that work at one screen resolution or shape, but break totally if you rotate or resize the screen just a little. Dialog boxes that mysteriously vanish. Broken notifications. Too many notifications. Notifications that are on by default. Apps that require access to all of your files, your camera, and your microphone just to run. Ads, popups, popunders, popovers, ads, oh and did I mention ads? Animated ads, blinking ads, repeated ads, ads that choke a page so completely that you’re lucky to see one or two lines of content, ads that make web pages painfully slow to load and drain your device battery as a result. Retargeted ads that creepily follow you from platform to platform and device to device. Ads built into the operating system that appear periodically and cannot be disabled. Paywalls. Clickbait. Newsfeeds full of junk we aren’t interested in and don’t want to see. Hundred-page end user license agreements that literally no one reads, but that you must agree to before you can use an app. I could go on. I could do this all day.

This is what the arms race of attention has reduced the Web to: web pages drowning in ads. If you don't see an image above, your browser's ad blocker has blocked it! (Source)

I challenge you to pay really close attention for just one day. Try to notice every time an application you’re using fails to do something, does something frustrating or unexpected, or gets in the way of something you’re trying to do. This isn’t as easy as it sounds. We’ve all become so inured to bad software that we tend not even to notice it. Try this exercise and I guarantee you’ll be surprised.

Digital spaces

We spend a lot of our time in front of screens. The devices, operating systems, and applications we use are effectively the digital spaces where we spend our time. These digital spaces are as important to us now as our physical spaces, and this importance is only going to continue to increase over time.

What’s the first thing you do when you move into a new space? You open the curtains. You put down a carpet. You hang some art on the wall. You place objects that spark joy and bring back happy memories. You install furniture that suits your life (and work) style. Customizing our spaces is what humans do in order to make them more comfortable and more useful. It’s also how we make a space feel like our space, or like a home. This has always been possible in physical spaces, from small customizations like hanging art or moving furniture to larger ones like knocking down walls or expanding a space.

Despite the fact that we spend more and more time in digital spaces, this has not been possible with our digital spaces for a long time. To the extent that you can customize digital spaces, such as choosing a background for your social media page or switching an app to dark mode, it’s in a highly circumscribed fashion, controlled by the software publisher (and such options often cost money). These options give the veneer of control while not letting us actually change things like functionality.2

We’ve been living our entire digital lives in a series of digital hotel rooms—where the menu of options is, at most, “single or double, low floor or high floor”—and we don’t even realize it, because we’re unaware that there’s an alternative.3,4

Even on the Web, which was designed as a more open platform specifically to make this sort of change easy—a web browser used to be called a “user agent” for a reason, after all—this has become very difficult.5 That’s because your web browser doesn’t work for you, it works for the company that made it.6

How did this happen?

How have we come to this? To attempt to do justice to this interesting question would require a separate article, or really, a book.7 Over the past twenty years, clever tech firms saw what we wanted, and gave it to us: a case of “be careful what you wish for.” To stretch the prior metaphor a little bit, they gave us ever fancier, ever larger, ever more well-appointed digital hotel rooms, and we ate them up. Never mind that we never really had any privacy inside of them, or that they were filled with ads and content that we never asked for.

Why did we allow this to happen? I can think of several reasons. For one thing, digital technology is very new. We’re still coming to terms with it, getting used to it, and understanding what it’s capable of. To many users, it did and to a large extent still does feel arcane. Many users feel that they need a high priest to explain tech, to package it up and make it approachable. Big tech firms were more than happy to fill that role. Firms beginning with AOL and Microsoft exploited this. They took advantage of the fact that most users care more about simplicity and usability than they do about things like privacy, and offered a curated, “walled garden” experience with guard rails. For another, there were enormous capital costs associated with building and running apps and Internet services, which led to some degree of centralization around the few companies with the necessary resources and expertise. (Fortunately, these costs have come down considerably in recent years.)

Another reason is network effects and monopoly. The importance and force of network effects in technology cannot be overstated. Products like Google’s Gmail literally never need to improve because of platform and network effects and the number of people locked into using it. This allows Google and firms like it to move further to the right on Chris Dixon’s “Attract -> Extract” curve. (And it will work until, eventually, someone comes along with a radical innovation that actually disrupts email. Email has been surprisingly resilient, but it will happen one of these days.)

For another reason, until pretty recently, software felt like a fun novelty to most people. It felt more like a game than work or self-expression. It didn’t feel central to our identities, our sense of self, and our social lives, until, more recently, it suddenly did. Big tech firms foresaw and took advantage of this before everyday users understood what was happening, a case of the frog boiling slowly in water and not realizing it until it’s too late. We thought we were the customer. All along, we’ve been the product. We’ve been gorging at the table without realizing that we are the main course.

Free sounded too good to be true—who would pass up the chance to move into a fully-furnished hotel room, full of digital conveniences, where they could live rent-free? If we continue on this path, however, it’s pretty clear where it leads.

To be clear, for most of human history, most people did not have physical spaces they could customize. Many still don’t. The arc of the human story is long, but it bends towards decentralization, personalization, and self-sovereignty. What played out in physical spaces is just beginning to play out in digital spaces.

Why now?

So what’s changed? Why is now the time to have this conversation? Why are we suddenly capable of reclaiming our digital self-sovereignty, of escaping from the mega-tech-hotel, of taking control of and customizing our spaces, when we’ve been incapable of it for the past few decades?

Whether we’re capable of it or not, the current situation is untenable. As described above, we’ve put far too much power into the hands of a tiny coterie of unaccountable, for-profit companies and I cannot see this situation continuing for much longer. Private American firms have even begun to censor sovereign states. People are increasingly fed up with the situation, and as we come to understand the real value of data and how central it is to our lives, society, and markets, we won’t put up with it for much longer.

Another reason is that the exponential improvements we’ve seen in computer hardware for the past few decades are also increasingly unsustainable. A lot of the perceived improvement in software has in fact come about as a result of exponentially better hardware: faster processors, faster networking equipment, cheap storage, etc. To some extent software has gotten a free ride: better hardware has allowed us to be lazy about building better software, and to assume that there will always be plenty of memory and processing power. However, these trends cannot continue forever. As we rapidly approach the fundamental limits of hardware technologies such as integrated circuits, now is an excellent time to rethink how we design and build software.

For another thing, we have much more powerful tools at our disposal. We have radical new ideas and new models, like blockchain and P2P, to build on top of. These did not exist and/or weren’t proven until quite recently. They’re powerful primitives. These technologies in turn could not have worked before we had lower-level building blocks such as ubiquitous, fast, wide, always-on Internet connections. We needed standards to emerge before we could use them as a springboard to imagine and build even more powerful technologies. In particular, the emergence of certain enabling technologies such as git, Merkle trees, PKI, and especially virtualization has enabled new modes of computation and coordination. It’s now routine to run a usable operating system inside of another operating system on everyday hardware, something that was impractical for most users not long ago.
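Of the enabling technologies mentioned above, the Merkle tree is perhaps the easiest to show in a few lines. What follows is a minimal sketch, not how git or any particular blockchain actually does it: the function name `merkle_root`, the one-byte leaf and node prefixes, and the rule of carrying an unpaired node up unchanged are all illustrative assumptions. The idea it demonstrates is that a single short hash can stand in for an arbitrarily large set of data, so that peers who don't trust each other can still agree on what that data is.

```python
import hashlib
from typing import Sequence


def _h(data: bytes) -> bytes:
    """SHA-256 digest of data."""
    return hashlib.sha256(data).digest()


def merkle_root(chunks: Sequence[bytes]) -> bytes:
    """Return one 32-byte hash that commits to every chunk, in order.

    Assumptions made for this sketch: leaves are prefixed with 0x00 and
    interior nodes with 0x01 so the two can't be confused, and an
    unpaired node at the end of a level is carried up unchanged.
    """
    if not chunks:
        raise ValueError("need at least one chunk")

    # Hash each chunk to form the bottom level of the tree.
    level = [_h(b"\x00" + chunk) for chunk in chunks]

    # Repeatedly hash adjacent pairs until a single root remains.
    while len(level) > 1:
        parents = [
            _h(b"\x01" + level[i] + level[i + 1])
            for i in range(0, len(level) - 1, 2)
        ]
        if len(level) % 2 == 1:
            parents.append(level[-1])  # odd node out moves up as-is
        level = parents

    return level[0]


if __name__ == "__main__":
    original = [b"chapter one", b"chapter two", b"chapter three"]
    tampered = [b"chapter one", b"chapter 2", b"chapter three"]
    print(merkle_root(original).hex())
    print(merkle_root(original) == merkle_root(tampered))
```

Changing any single chunk changes the root, which is what lets a peer verify data it received from a stranger against a root hash it obtained from a source it trusts.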

There are also many more tech savvy people in the world today than there were at the advent of the Internet. Thanks to cloud computing it’s also gotten much cheaper and easier to run your own Internet infrastructure. You can run a private, personal virtual server for $5 per month, well within the reach of many millions, if not billions, of humans. Developing applications has also gotten easier and faster as programming languages, frameworks, software stacks, and online resources have proliferated.

Finally, while we got off to a slow start, we’ve recently figured out how to organize, finance, build, operate, and govern massive, complex infrastructure, as complex as anything run by the tech giants. The open source movement has picked up considerable pace in recent years, and it’s just getting started. Linux is the first and still the best example of what open source is capable of. Linux did not cause Microsoft, Apple, or Google to go out of business—that was never the intention of the project, and in fact, these firms all rely on Linux to a greater or lesser extent—but it did offer an important, realistic alternative to centralized, closed source, expensive software. Alternatives and consumer choice are powerful because they introduce competition. It’s not immediately obvious how to make money on open source projects and how to make them sustainable, but new business models have emerged and many examples continue to prove that it’s viable. The most interesting, innovative software in the world today is being built by collectives, not corporations.8

What do we do about it?

Given the untenable situation in which we find ourselves, and the tools and ideas we now have at our disposal, what are some steps we can take today to begin improving things? This could also easily be the subject of its own article or book, but here are a few possible approaches in brief.

Alternative business models

The dominant business model that’s emerged for software is advertising-supported access to software as a service that’s free for the end user, published and hosted by a third party, running as a packaged app or in a web browser. This model clearly works and it should continue to be an option for those, including content producers and consumers, who wish to continue to access digital apps and services for free in exchange for viewing ads and having their data mined.

However, more and more consumers are becoming privacy-conscious and/or want more control over their software and data. These users may care more about software that is “free as in freedom” than they do about software that’s “free as in beer.” In other words, they may be willing to spend a few dollars a month to access apps, services, and content that give them a better experience, and one over which they have more control. The growing popularity of platforms like Patreon and Substack suggests as much.

For users such as these, we need to explore alternative business models. In order for software and computing to work for us rather than for big tech companies, we need to take a more active role in where and how our software runs. This might involve paying for a base level of compute service—the way we already pay for utilities such as heat, water, and electricity, not to mention Internet access!

If Bitcoin has shown us one thing, it’s that radically different business models have a role to play in reimagining software. There is no company, nor even a foundation, that built, maintains, or provides support for Bitcoin. The network itself is the sovereign organization, and it effectively bootstrapped and maintains itself. A large, important, valuable global network sprang into existence of its own accord because the incentives were right.

Novel business models, like all cutting edge innovation, may not make sense at first glance. We should nevertheless keep an open mind, run many experiments, and look for innovation to come out of left field.

There is a big picture

In order to realize a cohesive Web3 vision, lots of disparate ideas need to coalesce and lots of distinct projects need to find a way to fit together. The Web is a testament to the power of openness, standards, and collaboration to promote innovation. It emerged precisely at a time when the incumbent tech leaders, firms such as AOL, Microsoft, CompuServe, and Prodigy, were trying to colonize and balkanize the early Internet. The Web exists because of the vision and boldness of organizations like Netscape that chose not to patent the technologies they developed. If Microsoft had had its way and had not faced competition from Netscape (and, later, Apple and Google), the Web would not have been open. Of course, in retrospect we can see clearly that an open Web was the right way forward, since it led to a singular, standard platform that runs on every device and that’s relatively easy to develop on, which in turn led to an explosion of innovation.

We should not lose faith despite the fact that the following generation of tech giants largely, ultimately, sealed off the Web into the very walled gardens that its pioneers were trying to avoid. There is still a great deal of openness, from the standards developed and promulgated by organizations like the W3C and IETF, to the open protocols that still undergird the Internet and the Web, to the burgeoning open source movement and platforms like GitLab, GitHub, and StackOverflow that facilitate it. This openness means that it’s much easier to build software today than at any point in history.

However, in spaces like blockchain today we’re seeing the same balkanization that marked the early days of the Web. By and large these platforms rely on proprietary technology rather than developing shared standards, and as a result they are mostly incompatible and don’t share data or tooling. This is perhaps unavoidable, as many visionaries have competing, largely self-serving visions for what Web3 can or should look like.9 It’s a process that needs to play out with the emergence of any brand new technology.

At the end of the day, blockchain is just one primitive of many that will be required to bring Web3 to life. It has an essential role to play, but so do many other technologies. For those of us working on Web3 technologies, it’s essential that we stay honest and humble. It’s important that we zoom out and understand the larger context of what we’re all working towards and the values that unite us, and of how our piece fits in, rather than becoming hubristic or self-congratulatory. The Internet, the Web, computing and software in general today are far too complex to be dominated by one new technology or one team’s vision. Any comprehensive Web3 vision worth its salt has to account for this diversity and breadth.

Remove complexity

There’s irony in the fact that, while we have more tools at our disposal now than ever before and programming has become much easier than it used to be, the underlying systems have become vastly more complex. Today, there are literally tens of millions of lines of code sitting between you and the task you want to accomplish. Even something as simple as reading a text file using a web browser probably involves upwards of 100M lines of code when you account for all of the intervening layers and systems. Each of these lines introduces complexity and possible bugs and exploits.

This complexity has had some interesting consequences for software. It has isolated us from a lot of the messiness going on under the hood, including things like memory management and communicating with hardware, making it relatively easy to hack together simple applications. This has made programming and application development accessible to many more people than ever before, leading to more innovation. At the same time, it has introduced an enormous knowledge gap between system programmers (those responsible for the lower-level systems, such as operating systems, browsers, and blockchains) and application developers. In the early days of computing, to use a computer was effectively to program a computer: all users were by definition programmers, and all programming was hard. Today, thanks to ideas like #NoCode, the trend is the opposite: even many programmers are mere users, increasingly ill-informed about what’s going on under the hood.

The consequences are very real. As discussed above, software has become far more fragile. Whereas software engineering used to be a real discipline—carefully and thoughtfully designing and building things to last, akin to non-digital infrastructure like buildings and bridges—we now treat software more like fast fashion: design and launch quickly, iterate quickly, and expect apps to break all the time. The underlying complexity and the ignorance of fundamentals such as memory management on the part of most programmers today has made software inefficient, unreliable, insecure, and difficult to test and maintain.

We cannot improve things by adding even more complexity. We need to go back to basics. We need to drastically remove complexity. Modern operating systems have ballooned into behemoths with tens of millions of lines of code. Contrast this with the original version of UNIX, which contained only 2,500 lines of code, and the original version of Linux, which contained around 10,000!

We need to rethink where we draw abstraction boundaries between hardware and software. Virtualization has an important role to play here. While we probably do need to rethink things from the ISA up, we don’t actually need to start with hardware. We can get pretty far with clever virtual machines. Fortunately, the list of technologies starting at the bottom of the stack is growing, which gives me hope. WebAssembly (Wasm), Urbit, Nebulet, and Arrakis all reimagine the compute stack from the bottom. Wasm and Urbit, in particular, are already pretty mature and can run many real-world applications. If these ideas succeed, a Nock or Wasm chip could follow.

Back to basics

One of the weaknesses of many Web3 platforms is that they don’t go far enough. Most attempt to address one particular use case or fix one problem. Blockchain, for instance, gives us sovereign money and sort of fixes identity, but has very little to say about all of the other things that are wrong with the software stack. It doesn’t even work on mobile, in spite of the fact that the vast majority of software users in the world today primarily rely on mobile.

Rethinking money and identity is a good start, but it doesn’t go nearly far enough. We need to rethink the way we store and distribute files and content more generally. We need to rethink the underlying protocols we use to exchange data. We need to rethink the software and OS stack from the ground up in order to address its inherent complexity and fragility. We need to boldly imagine, invent, and build a future where software works for us rather than against us. There should be no sacred cows. At the same time, we should be mindful not to throw the baby out with the bathwater: we should keep the things that currently do work well.

One project that is taking such a bold, innovative approach, and that has struck this balance well, is Urbit. Urbit is a radical reimagining and reinvention of the entire software stack, from the very bottom all the way up to the application layer, and everything in between. At the same time, Urbit’s interface today runs in a web browser, and it’s built using proven technologies like C and UNIX. It’s still too edgy and a little too hard to use for mainstream users. That’s okay. You can make everyone happy with a photo sharing app. You can’t make everyone happy with a new virtual machine or compute paradigm. That will come in time.

Towards a vision for human-centric computing

When they first emerge, all technologies serve a tiny minority of elites. The first book printed on the printing press was the Bible and it was written in a language, Latin, that was legible only to priests. After the masses had gained the ability to read, write, and publish, the emerging mediums of radio and television were subsequently colonized and exploited by governments, countries, and demagogues to their benefit, and to the detriment of ordinary people. Computing technologies have been no different.

Given how the computer emerged (as a room-sized mainframe that cost millions of dollars and required highly specialized, arcane knowledge to operate), it’s no surprise that, from the beginning, computers and the compute stack more generally have been designed in an institution-centric fashion rather than in a human-centric fashion. The prevalent client-server model, where a powerful, central server handles requests from and stores data on behalf of many users, is a vestige of these origins.10

Software has indeed come a long way since its humble origins, and we should celebrate this fact and all of the incredible things that it has enabled. Software continues to eat the world, and things are possible today that were unimaginable even a few years ago. However, this focus on the positive speaks to a lack of imagination. For all of this progress and all of its strengths, software today has some serious design flaws and structural problems. Why are things this way? Does it have to be this way? Must we forever be shackled to software that exploits us, and that doesn’t work the way we want it to—that serves corporations rather than its users?

A lot of the excitement and optimism we feel towards software today is in fact behavior learned from the very companies that benefit when we think this way. What would software look like if we could go back to basics and address some of these underlying problems?

Technologies such as P2P file sharing and blockchain have recently turned the existing software model upside down and introduced a new paradigm where all users can participate as equal peers and none holds administrative privileges over a network and its data. This new network topology puts the user back in the driver’s seat. It gives each of us the ability to control our own experience: to customize our digital space and join the digital commons as a fully-fledged citizen, so to speak. While this means that radically novel and better modes of compute are now thinkable, we’re still very early in this transition and much work remains to be done.

It didn’t have to be this way. It still doesn’t. We can still fix it. It’s time for us to think for ourselves, and time to design and build software that serves us.

1. There are, of course, some exceptions. It’s not like we don’t know how to build resilient software. The software that runs airplanes, spaceships, bombs, and hospitals is a bit more robust than average. But we choose not to build most software in a robust fashion, not to prioritize it, or more often, we don’t want to pay what it costs to engineer really good software.

2. Meanwhile, big tech firms like Facebook and Google spend literally billions of dollars a year constantly redesigning their software, moving buttons and tweaking colors, without ever introducing any real innovation. Serious question: when was the last time Google added a single, real, innovative feature to any of their major apps? What are their tens of thousands of engineers spending their time on? Some software needs to keep evolving. But some probably doesn’t: look at IPv4 for instance, or maybe SMTP. Twitter is a good example of a protocol that probably does not need to evolve much further. If Twitter were actually an open protocol, it would probably have been frozen around the time threaded tweets were rolled out. It’s totally absurd to think how many engineers Twitter employs, and how much it pays them, for a product that would be better served if it just stopped changing. (For more on this fascinating topic, see Kelvin versioning.)

3. This helpful metaphor comes from the fascinating Understanding Urbit podcast series.

4. It is theoretically possible to use exclusively software that is both free and open source. You can certainly run Linux. You can use Chromium to browse the web. You can sort of run your own email server, although in practice this has become quite difficult. Beyond this, it gets really hard really fast. It means, by definition, not using any popular social media apps. It means not using a modern smartphone (sort of). It makes collaboration on documents and codebases much harder. It means a lot of inconvenience. It sort of means becoming a social outcast. For the record, I have a great deal of respect for people like Richard Stallman who choose this path in spite of these challenges.

5. “Augmented browsing software” tools such as Greasemonkey and Tampermonkey still exist, but multiple attempts have been made to neuter them and other such tools have been co-opted by corporate publishers. In any case, we do not seem to have developed a culture or a practice of users really filtering, modifying, and displaying web content in the way they want to consume it, rather than how publishers want it to be presented, at least for now.

6. Fortunately, at least in the browser space, we have other options. Firefox and Brave are both good choices, as both are free and open source and Firefox, at least, is backed by a non-profit foundation.

7. Indeed, others have written compellingly on this topic. I recommend starting with Jaron Lanier’s Who Owns the Future?

8. A lot of very interesting and important work is being done in this area. Some of the most promising ideas include Exit to Community, Zebras Unite, Open Collective, Gitcoin, and the foundations behind popular products such as Ghost.

9. If you ask me, money and digital property will not be the main primitives that undergird Web3. They’re simply not things that excite mainstream users. For more on this topic, see The Cult of Ownership and What’s It Good For?

10. In practice, today, the server is more likely to be a huge collection of relatively powerful machines spread out across global datacenters rather than one supercomputer, but it makes no difference to the metaphor and the point stands.